Assessment Literacy – A Workshop Series for Language Teachers and Researchers at the Arqus Universities

As part of the series in language and cross-cultural competence for staff at partner universities, Leipzig University organises four separate thematic workshops in the months of November and December.

Multilingual universities need reliable assessment and evaluation of foreign language competence based on comparable standards. This workshop series is aimed at language teachers and researchers and will equip them with key competencies in the area of assessment literacy. We will deal with quality criteria in test development, statistical procedures for evaluating test and task quality, the reliable linking of tasks to the Common European Framework of Reference for Languages (CEFR), and a challenge that digitalisation (also) brings to language teaching: e-cheating.

Workshop format: Online (Zoom).

Registration: Please register at least 6 days before the date of the respective workshop. We will then let you know in good time whether we can confirm a place, and you will receive all further information (e.g. the Zoom link) via email.

Fee: The workshops are free of charge for staff members of the Arqus Alliance partner universities.

Contact: For any enquiries, please contact Jupp Möhring.

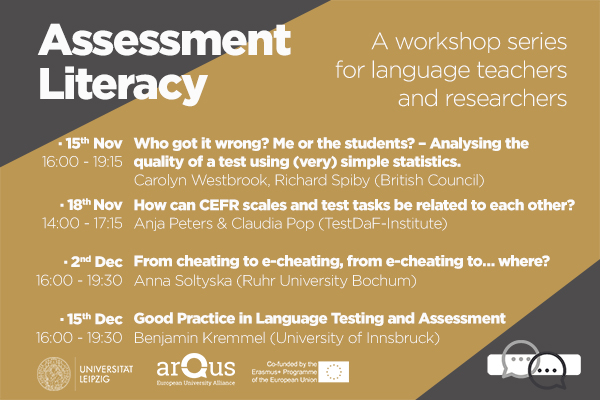

Date and time (CET):

- Who got it wrong? Me or the students? – Analysing the quality of a test using (very) simple statistics. Carolyn Westbrook & Richard Spiby (British Council). 15.11.2021, 16:00 – 19:15.

- How can CEFR scales and test tasks be related to each other? Anja Peters & Claudia Pop (TestDaF-Institute). 18.11.2021, 14:00 – 17:15.

- From cheating to e-cheating, from e-cheating to… where? Anna Soltyska (Ruhr University Bochum). 02.12.2021, 16:00 – 19:30.

- Good Practice in Language Testing and Assessment. Benjamin Kremmel (University of Innsbruck). 15.12.2021, 15:00 – 18:15.

Detailed Programme & Registration:

- Who got it wrong? Me or the students? – Analysing the quality of a test using (very) simple statistics. Carolyn Westbrook (British Council). 15.11.2021, 16:00 – 19:15.

When we design a test, we think (hope) we have done a good job. We want the test to distinguish between learners at different levels and to be a fair reflection of the learners’ actual ability. But how do we know whether we have achieved this? We may get some idea while scoring the test but with large numbers of students (particularly if tests are marked anonymously), we may not be able to tell if the good students are doing well and the weaker students are struggling. So how do we find out?

This workshop will introduce some basic statistics for evaluating the quality of tests. For receptive skills tests, we will look at what item facility and item discrimination are, how to calculate these and how to interpret them. We will then discuss the importance of rater reliability for productive skills tests and assessments, how to calculate this and how to interpret it. Ideally, participants should have access to Excel during the session.

Deadline: 9 November 2021.

- How can CEFR scales and test tasks be related to each other? Anja Peters & Claudia Pop (TestDaF-Institute). 18.11.2021, 14:00 – 17:15.

This workshop will provide participants with guidance on how to relate test tasks for receptive skills to the CEFR. After a brief introduction to the purpose, content and impact of the CEFR and its Companion Volume, we will analyse selected scales and descriptors from the Companion Volume in order to gain a better understanding of key characteristics of the levels, in particular the “plus” levels. This will be followed by a discussion of sample tasks with regard to their strengths, weaknesses and relation to specific CEFR levels. Finally, we will consider the item writing process, for example, how to increase or decrease the difficulty of test tasks and items.

Deadline: 12 November 2021.

- From cheating to e-cheating, from e-cheating to… where? Anna Soltyska (Ruhr University Bochum). 02.12.2021, 16:00 – 19:30.

The unprecedented shift from face-to-face, on-campus activities to those taking place online has affected nearly all areas of higher education during the recent Covid-19 pandemic, and the teaching, learning and assessment of foreign languages are no exception.

Understandably, moving assessment practices online while safeguarding their high quality has proven challenging for all stakeholders. In particular, major concerns have been voiced about the reliability of newly developed and often insufficiently piloted assessment tasks, which do not seem cheating-proof and can thus easily be manipulated or misused.

The workshop will deal with the broad concept of assessment-related malpractice in the context of language testing in higher education. Participants will be given the opportunity to share their own and their institutions’ experiences with the phenomenon of (e-)cheating and to work towards feasible measures to reduce it in their contexts, thus further ensuring the high quality of assessment services. It is hoped that this will strengthen the Arqus university alliance through closer collaboration among language experts and language centres and reinforce the mutual recognition of language certificates issued by member universities.

The workshop will be offered via Zoom and will last 2×90 minutes. The first part, titled “From cheating to e-cheating”, will be devoted to the gradual transition from analogue to digital malpractice and to the relevant countermeasures that have been proposed, if not implemented, so far. In the second part, titled “From e-cheating to… where?”, participants will discuss current developments in e-cheating and their implications for shaping the valid and reliable assessment procedures of tomorrow.

Deadline: 26 November 2021.

- Good Practice in Language Testing and Assessment. Benjamin Kremmel (University of Innsbruck). 15.12.2021, 15:00 – 18:15.

Language tests are ubiquitous in educational contexts. But what distinguishes useful language tests from the multitude of tests that generate little valuable information for language teachers or learners? How can we better understand and evaluate language tests? And what should we look for when we want to create useful tests ourselves?

The workshop will address these fundamental questions and will try to illustrate important principles of good practice in language testing. Different purposes of testing and their implications will be explained, and practical guidelines for creating useful language tests will be presented through an introduction to key quality criteria and hands-on tools and techniques for test development such as the development of test specifications.

Deadline: 9 December 2021.

Please note: In order to ensure the quality of the sessions and for the benefit of the participants, we limit the number of participants for each workshop to a maximum of 21 people. Three places per workshop are reserved for each partner university, and these will be allocated on a first-come, first-served basis. If there are fewer than three registrations from one university, the remaining places will be allocated in list order regardless of affiliation to a partner university.